Accelerating Diffusion Sampling: From Multi-Step to Single-step Generation

📅 Published: | 🔄 Updated:

📘 TABLE OF CONTENTS

- Part I — Foundation and Preliminary

- 1. Introduction

- 2. Limitations of Classical Generative Models

- 3. The Rise of Diffusion Models

- 4. The Dual Origins of Diffusion Sampling

- Part II — Fast Sampling without Retraining

- Part III — Distillation for Fast Sampling

- 8. Trajectory-Based Distillation

- 9. Adversarial-Based Distillation

- 10. Distribution-Based Distillation

- 11. Score-Based Distillation

- 11.1 Unifying View: Distill the Score Residual

- 11.2 A Common Objective: Score Divergence

- 11.3 A Canonical Training Algorithm (Template)

- 11.4 Where Methods Differ

- 11.5 Score Distillation for Fast Sampling

- 11.6 Score Distillation for Guidance and Editing

- 11.7 Acceleration vs Guidance: Common Core, Different Endgame

- 12. Consistency-Based Distillation

- Part IV — Flow Matching: Looking for Straight Trajectory

- Part V — From Trajectory to Operator

- 15. References

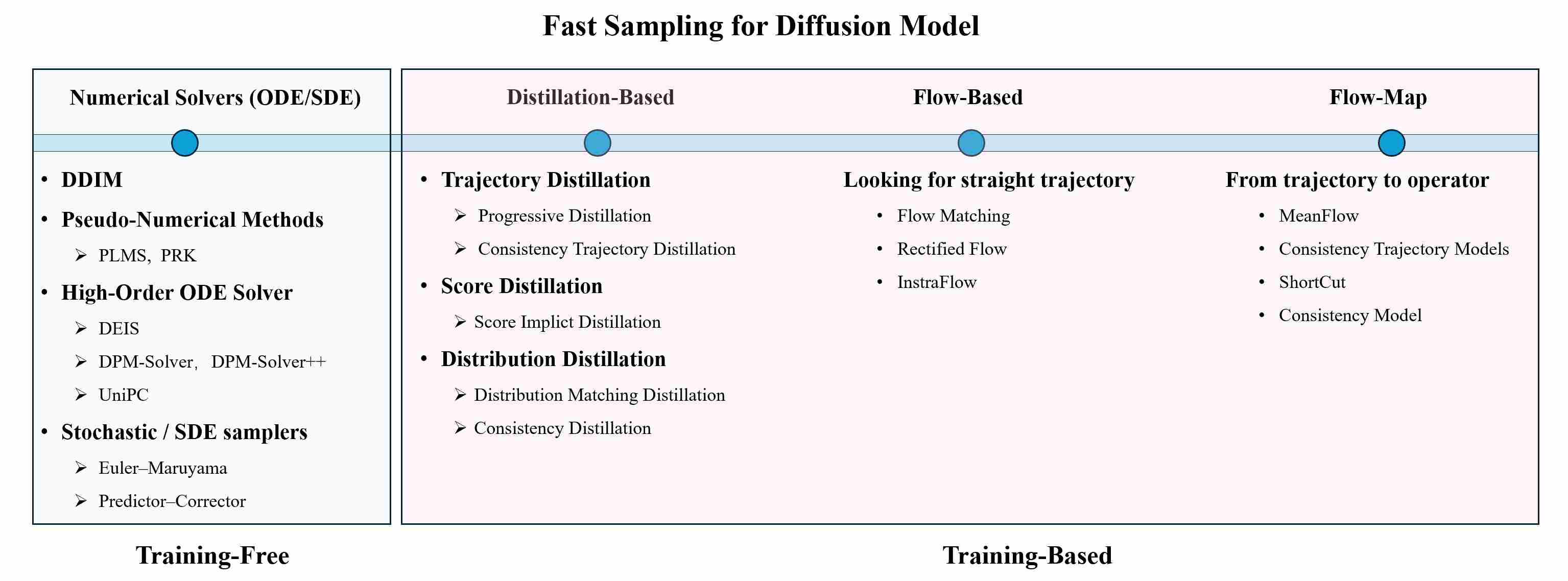

This article systematically reviews the development history of sampling techniques in diffusion models. Starting from the two parallel technical routes of score-based models and DDPM, we explain how they achieve theoretical unification through the SDE/ODE framework. On this basis, we delve into various efficient samplers designed for solving the probability flow ODE (PF-ODE), analyzing the evolutionary motivations from the limitations of classical numerical methods to dedicated solvers such as DPM-Solver. Subsequently, the article shifts its perspective to innovations in the sampling paradigm itself, covering cutting-edge technologies such as consistency models and sampling distillation aimed at achieving single-step/few-step generation. Finally, we combine practical strategies such as hybrid sampling and guidance, conduct a comprehensive comparison of existing methods, and look forward to future research directions such as learnable samplers and hardware-aware optimizations.

Part I — Foundation and Preliminary

1. Introduction

The history of diffusion model sampling is a story of a relentless quest for an ideal balance within a challenging trilemma: Speed vs. Quality vs. Diversity.

Speed (Computational Cost): The pioneering DDPM (Denoising Diffusion Probabilistic Models) demonstrated remarkable generation quality but required thousands of sequential function evaluations (NFEs) to produce a single sample. This computational burden was a significant barrier to practical, real-time applications and iterative creative workflows. Consequently, the primary driver for a vast body of research has been the aggressive reduction of these sampling steps.

Quality (Fidelity): A faster sampler is useless if it compromises the model’s generative prowess. The goal is to reduce steps while preserving, or even enhancing, the fidelity of the output. Many methods grapple with issues like error accumulation, which can lead to blurry or artifact-laden results, especially at very low step counts. High-quality sampling means faithfully following the path dictated by the learned model.

Diversity & Stability: Sampling can be either stochastic (introducing randomness at each step) or deterministic (following a fixed path for a given initial noise). Stochastic samplers can generate a wider variety of outputs from the same starting point, while deterministic ones offer reproducibility. The choice between them is application-dependent, and the stability of the numerical methods used, especially for high-order solvers, is a critical area of research.

This perpetual negotiation between speed, quality, and diversity has fueled a Cambrian explosion of innovative sampling algorithms, each attempting to push the Pareto frontier of what is possible.

2. Classical Generative Models

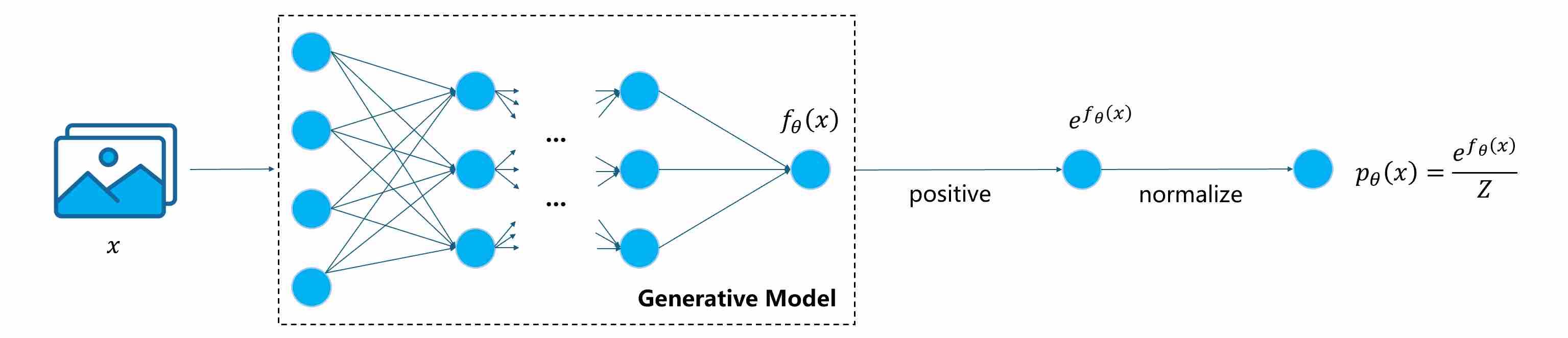

The essence of any generative model is to build a distribution $p_{\theta}(x)$ to approximate the true data distribution $p_{\text{data}}(x)$, that is,

\[p_{\theta}(x) \approx p_{\text{data}}(x)\]Once we can evaluate or sample from $p_{\theta}(x)$, we can synthesize new, realistic data.

However, modeling high-dimensional probability densities is notoriously difficult for several intertwined reasons:

Intractable Normalization Constant. Many distributions can be expressed as

\[p_{\theta}(x)=\frac{1}{Z}\,\exp (f_{\theta}(x))\]where

\[Z=\int \exp (f_{\theta}(x))dx\]is the partition function. Computing $Z$ in high-dimensional space is intractable, making likelihood evaluation and gradient computation (with respect to $\theta$, not $x$) expensive.

Curse of Dimensionality. Real-world data (images, audio, text) lies near intricate low-dimensional manifolds within vast ambient spaces. Directly fitting a normalized density over the full space is inefficient and unstable.

Difficult Likelihood Optimization. To solve $p_{\theta}$, the most common strategy is to optimize $\log p_{\theta}(x)$ (Maximum likelihood estimation). However, maximizing $\log p_{\theta}(x)$ requires differentiating through complex transformations or Jacobian determinants—tractable only for carefully designed, such as flow models.

Although directly estimating the full data density is nearly impossible in high-dimensional space, different classes of generative models have developed specialized mechanisms to avoid or bypass the core obstacles outlined above.

Variational Autoencoders (VAEs): Approximating the Density via Latent Variables. VAEs sidestep the intractable normalization constant by introducing a latent variable $z$ and decomposing the joint distribution as

\[p_\theta(x,z)=p_\theta(x \mid z) \cdot p(z),\]Instead of maximizing $\log p_\theta(x)$ directly, the VAE introduces an approximate posterior $q_\phi(z\mid x)$ and applies Jensen’s inequality:

\[\mathcal L_{\text{MLE}} = \log p_\theta(x) \ge \underbrace{ \mathbb E_{q_\phi(z \mid x)}[\log p_\theta(x \mid z)] - \mathrm{KL}(q_\phi(z \mid x) \mid p(z))}_{\mathcal L_{\text{VLB}}}.\]Optimizing $\mathcal L_{\text{MLE}}$ requires to compute the gradient of $Z$, which is usually intractable. However, $\mathcal L_{\text{VLB}}$ contains only terms that are individually normalized and easy to compute:

- $p(z)$ is a simple prior, typically $\mathcal N(0,I)$.

$q_\phi(z \mid x)$ is an approximate posterior, which is chosen from a tractable family, e.g.

\[q_\phi(z\mid x) = \mathcal{N}(z; \mu_\phi(x), \mathrm{diag}(\sigma_\phi^2(x))).\]Its log-density is explicitly computable and differentiable with respect to $\phi$.

$\log p_\theta(x \mid z)$ is conditional likelihood, which is typically Gaussian or Bernoulli. Since the reconstruction loss is simply the negative log-likelihood of the assumed data distribution given the latent variable. the choice of conditional likelihood determines the form of the reconstructed loss function.

Conditional Likelihood $p_\theta(x \mid z)$ Reconstruction Loss $\mathcal{N}(x; \mu_\theta(z), \sigma^2 I)$ Mean Squared Error (MSE) $\mathrm{Bernoulli}(x; \pi_\theta(z))$ Binary Cross Entropy (BCE) $\mathrm{Categorical}(x; \pi_\theta(z))$ Cross Entropy $\mathrm{Laplace}(x; \mu_\theta(z), b)$ L1 Loss

Every term in the ELBO depends only on distributions that we define ourselves with explicit normalization constants, this turns an intractable integral into a differentiable objective. Sampling becomes straightforward: draw $z\,\sim\,p(z)$ and decode $x\,\sim\,p_\theta(x \mid z)$. The cost is blurriness in samples—an inevitable consequence of the Gaussian decoder and variational approximation.

Normalizing Flows: Enforcing Exact Likelihood via Invertible Transformations. Flow-based models make the density tractable by designing the generative process as a sequence of invertible mappings:

\[x = f_\theta(z),\qquad z\,\sim\,p(z).\]The change-of-variables formula yields an explicit, exact log-likelihood:

\[\log p_\theta(x)=\log p(z)-\log|\det J_{f_\theta}(z)|.\]Thus, both density evaluation and sampling are efficient and exact. However, this convenience comes with architectural constraints: each transformation must be bijective with a Jacobian determinant that is computationally cheap to compute. To maintain this property, Flow models (e.g., RealNVP, Glow) restrict the network’s expressiveness compared with unconstrained architectures.

Generative Adversarial Networks (GANs): Learning without a Normalized Density. GANs abandon likelihoods altogether. A generator $G_\theta(z)$ learns to produce samples whose distribution matches the data via an adversarial discriminator $D_\phi(x)$:

\[\min_G\max_D ; \mathbb E_{x\sim p_{\text{data}}}\,[\log D(x)] +\mathbb E_{z\sim p(z)}\,[\log(1-D(G(z)))].\]This implicit likelihood approach avoids computing $Z$, $\log p(x)$, or any Jacobian. Sampling is trivial: feed a random latent $z$ through the generator. Yet, the absence of an explicit density leads to instability and mode collapse—the model may learn to generate only a subset of modes of the true distribution.

Energy-Based Models (EBMs): Modeling Unnormalized Densities with MCMC Sampling. EBMs keep the simplest formulation of density—an unnormalized energy function $U_\theta(x)$—but delegate sampling to iterative stochastic processes like Langevin dynamics or Contrastive Divergence. The model defines:

\[p_\theta(x)=\frac{1}{Z_\theta}\exp(-U_\theta(x)).\]Because $Z_\theta$ is intractable, training relies on estimating gradients using samples from the model distribution, obtained via slow MCMC chains. This approach retains full modeling flexibility but sacrifices sampling speed and stability.

3. The Rise of Diffusion Models

Despite their conceptual elegance and individual successes, the classical families of generative models share a common limitation: they struggle to simultaneously ensure expressivity, stability, and tractable likelihoods.

VAEs approximate densities but pay the price of blurry reconstructions due to their variational relaxation.

Flow models achieve exact likelihoods, yet the requirement of invertible, volume-preserving mappings imposes severe architectural rigidity.

GANs generate sharp but unstable samples, suffering from mode collapse and non-convergent adversarial dynamics.

EBMs enjoy the most general formulation but depend on inefficient MCMC sampling and are notoriously difficult to train at scale.

These obstacles reveal a deeper dilemma: a good generative model must learn both an expressive data distribution and an efficient way to sample from it, but existing paradigms could rarely achieve both at once.

It was precisely this tension—between tractable density estimation and efficient, stable sampling—that motivated the birth of diffusion-based generative modeling. Diffusion models approach generation from an entirely different angle: rather than directly parameterizing a complex distribution, they construct it through a gradual denoising process, transforming simple noise into structured data via a sequence of stochastic or deterministic dynamics. In doing so, diffusion models inherit the statistical soundness of energy-based methods, the stability of likelihood-based training, and the flexibility of implicit generation—thereby overcoming the long-standing trade-offs that constrained previous approaches.

4. The Dual Origins of Diffusion Sampling

Before diffusion models became a unified SDE framework, two independent lines of thought evolved in parallel: One sought to model the gradient of data density in continuous space; the other built an explicit discrete Markov chain that learned to denoise. Both added noise, both removed it—yet for profoundly different reasons.

4.1 The Continuous-State Perspective: Score-Based Generative Models

The first paradigm was rooted in a fundamental question: if we had a function that could always point us toward regions of higher data probability, could we generate data by simply “climbing the probability hill”?

4.1.1 A Radical Shift — Learning the Gradient of data density

Directly modeling $p(x)$ is both theoretically and practically challenging, traditional generative models bypass these challenges through approximation or network structure constraints, but this also limits the model’s performance. One idea is that we do not learn the probability density, but instead learn the gradient of the probability density (also known as score function).

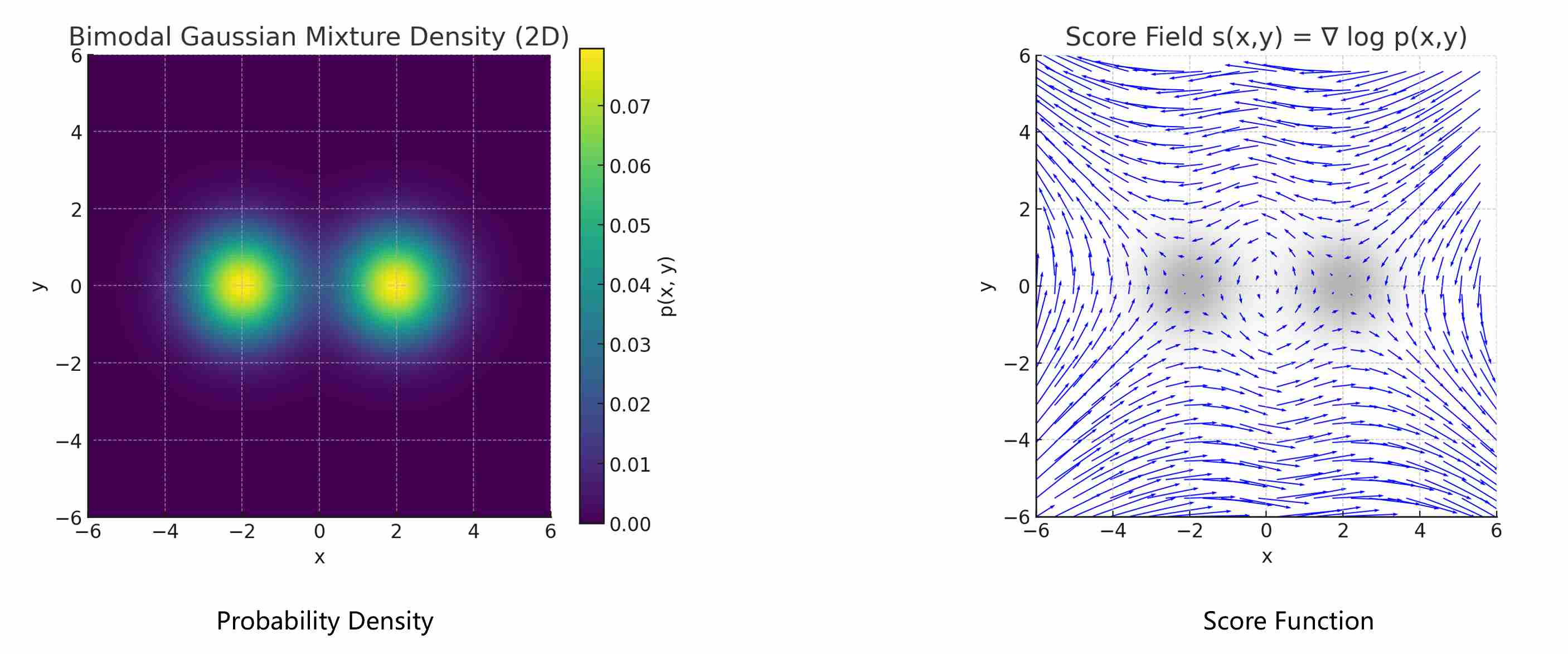

The score function, defined as

\[s(x)=\nabla_x\log p(x)\]As shown in the following figure, score function is a vector field which points toward regions of higher data probability. Learning this vector field—rather than the full density—offers two decisive advantages.

Independence from the Normalization Constant. Taking the gradient of $\log p(x)$ removes the partition function:

\[\nabla_x\log p(x) =\nabla_x\log \exp{(f_{\theta}(x))}-\nabla_x\log Z =\nabla_x\log f_{\theta}(x),\]because $Z$ is constant w.r.t. $x$. Thus, we can learn meaningful gradients without ever computing $Z$.

Direction Instead of Magnitude. The score tells us which direction in data space increases probability density the fastest. It defines a probability flow that guides samples toward high-density regions—akin to an energy-based model whose energy is \(U(x)=-\log p(x)\).

The most important one, is that the probability density and the score provide almost the same useful information, that’s because the score function and the probability density function can be converted between each other: Given the probability density, we can obtain the score by taking the derivative; conversely, given a score, we can recover the probability density by computing integrals.

4.1.2 Score Matching Generative Model

At the heart of this approach lies a powerful mathematical object: the score function, defined as the gradient of the log-probability density of the data, $\nabla_x \log p_{\text{data}}(x)$. Intuitively, the score at any point $x$ is a vector that points in the direction of the steepest ascent in data density. To calculate the score function of any input, we train a neural network $s_{\theta}(x)$ (score model) to learn the score

\[s_{\theta}(x) \approx \nabla_x \log p_{\text{data}}(x)\label{eq:1}\]The loss function is to minimize the Fisher divergence between the true data distribution and the model predicted output:

\[\mathcal{L}(\theta) = \mathbb{E}_{x \sim p_{\text{data}}}\left[ \big\|\,s_{\theta}(x) - \nabla_x \log p_{\text{data}}(x)\,\big\|_2^2 \right]\label{eq:2}\]The challenge, however, is that we do not know the true data distribution $p_{\text{data}}(x)$. This is where Score Matching comes in 1. Hyvärinen showed that via integration by parts (under suitable boundary conditions), the objective (equation \ref{eq:2}) can be rewritten in a form only involving the model’s parameters:

\[\begin{align} \mathcal{L}_{\text{SM}}(\theta) & = \mathbb{E}_{x \sim p_{\text{data}}} \left[ \frac{1}{2} \| s_\theta(x) \|^2 + \nabla_x \cdot s_\theta(x) \right] \\[10pt] & \approx \frac{1}{N}\sum_{i=1}^{N} \left[ \frac{1}{2} \| s_\theta(x_i) \|^2 + \nabla_x \cdot s_\theta(x_i) \right] \end{align}\]where $\nabla_x \cdot s_\theta(x) = {\text{trace}(\nabla_x s_\theta(x))}$ is the divergence of the score field. However, SM is not scalable especially for high-dimension data points, because the second term is the jacobin of score model.

For this purpose, Vincent introduced denoising score matching (DSM) 2 , by adding Gaussian noise to the real data $x$, the score model becomes predicting the score field of noised data $\tilde{x} = x + \sigma$.

\[s_{\theta}(\tilde{x}, \sigma) \approx \nabla_{\tilde{x}} \log p_{\sigma}(\tilde{x})\label{eq:5}\]where $p_{\sigma}$ is the data distribution convolved with Gaussian noise of scale $\sigma$, it is easy to verify that predicting $\nabla_{\tilde x} \log p_{\sigma}(\tilde x)$ is equivalent to predict $\nabla_{\tilde{x}} \log p_{\sigma}(\tilde{x} \mid x)$.

\[\begin{align} & \mathbb{E}_{\tilde{x} \sim p_{\sigma}(\tilde{x})}\left[\big\|\,s_{\theta}(\tilde{x}, \sigma) - \nabla_{\tilde x} \log p_{\sigma}(\tilde x)\,\big\|_2^2\right]\label{eq:6} \\[10pt] = \quad & \mathbb{E}_{x \sim p_{\text{data}}(x)}\mathbb{E}_{\tilde{x} \sim p_{\sigma}(\tilde{x} \mid x)}\left[\big\|\,s_{\theta}(\tilde{x}, \sigma) - \nabla_{\tilde{x}} \log p_{\sigma}(\tilde{x} \mid x)\,\big\|_2^2\right] + \text{const}\label{eq:7} \end{align}\]When $\sigma$ is small enough, $p_{\text{data}}(x) \approx p_\sigma(\tilde x)$. Optimizing Formula \ref{eq:7} does not require knowing the true data distribution, while also avoiding the computation of the complex Jacobian determinant.

\[\begin{align} \nabla_{\tilde{x}} \log p_{\sigma}(\tilde{x} \mid x) & = \nabla_{\tilde{x}} \log \frac{1}{\sqrt{2\pi}\sigma} {\exp}\left( -\frac{(\tilde{x}-x)^2}{2\sigma^2}\right) = -\frac{(\tilde{x}-x)}{\sigma^2} \end{align}\]4.1.3 NCSN and Annealed Langevin Dynamics

The first major practical realization of this idea was the Noise-Conditional Score Network (NCSN) 3. However, the authors found that the generated samples by solving \ref{eq:7} with a single small $ \sigma $ are of poor quality, the core issue is that real-world data often lies on a complex, low-dimensional manifold (high-density areas), in the vast empty spaces between data points, the score is ill-defined and difficult to estimate (low-density areas), so low-density regions are underrepresented, leading to poor generalization by the network in those areas. Their solution was ingenious: perturb the data with Gaussian noise of varying magnitudes $\sigma$ ($ \sigma_1 > \sigma_2 > \dots > \sigma_K > 0 $).

Large $ \sigma_i $: The distribution is highly smoothed, covering the global space with small, stable scores in low-density areas (easier to learn).

Small $ \sigma_i $: Focuses on local refinement, closer to $ p_{\text{data}} $.

The loss function now can be rewritten as:

\[\mathcal{L}(\theta) = \mathbb{E}_{\sigma \sim \mathcal{D}}\mathbb{E}_{x \sim p_{\text data}(x)}\mathbb{E}_{\tilde{x} \sim p_{\sigma}(\tilde{x} \mid x)}\left[\big\|\,s_{\theta}(\tilde{x}, \sigma) - \nabla_{\tilde{x}} \log p_{\sigma}(\tilde{x} \mid x)\,\big\|_2^2\right]\label{eq:8}\]With $\lambda{(\sigma)}$ balancing gradients across noise scales and $\mathcal{D}$ is a distribution over $\sigma$. To generate a sample, NCSN employed Annealed Langevin Dynamics (ALD) 3. LD 4 5 treats sampling as stochastic gradient ascent on log-density with Gaussian noise injected to guarantees to converge to the target distribution. ALD is an iterative sampling process:

Start with a sample drawn from pure noise, corresponding to a very high noise level $\sigma_{\text large}$.

Iteratively update the sample using the Langevin dynamics equation:

\[x_{i+1} \leftarrow x_i + \alpha s_{\theta}(x_i, \sigma_i) + \sqrt{2\alpha}z_i\]where $\alpha$ is a step size and $z_i$ is fresh Gaussian noise. This update consists of a “climb” along the score-gradient and a small injection of noise to encourage exploration.

Gradually decrease (or “anneal”) the noise level $\sigma_i$ from large to small. This process is analogous to starting with a blurry, high-level structure and progressively refining it with finer details until a clean sample emerges.

4.1.4 Historical Limitations and Enduring Legacy

The NCSN approach was groundbreaking, but it had its limitations. The Langevin sampling process was inherently stochastic due to the injected noise $z$ and slow, requiring many small steps to ensure stability and quality. The annealing schedule itself was often heuristic.

However, its legacy is profound. NCSN established the score function as a fundamental quantity for generative modeling and introduced the critical technique of conditioning on noise levels. It provided the continuous-space intuition that would become indispensable for later theoretical breakthroughs.

4.2 The Discrete-Time Perspective: Denoising Diffusion Probabilistic Models

Running parallel to the score-based work, a second paradigm emerged, built upon the more structured and mathematically elegant framework of Markov chains.

4.2.1 The Core Idea: An Elegant Markov Chain

Independently, Ho et al. 6 proposed a probabilistic approach that traded continuous dynamics for a discrete, analytically tractable Markov chain. Instead of estimating scores directly, DDPM proposed a fixed, two-part process.

Forward (Diffusion) Process: Start with a clean data sample $x_0$. Gradually add a small amount of Gaussian noise at each discrete timestep $t$ over a large number of steps $T$ (typically 1000). This defines a Markov chain $x_0, x_1, \dots, x_T$ where $x_T$ is almost indistinguishable from pure Gaussian noise. This forward process, $q(x_t \mid x_{t-1})$, is fixed and requires no learning.

\[q(x_t \mid x_{t-1}) = \mathcal{N}(x_t;\,\sqrt{\alpha_t}x_{t-1}, (1-\alpha_t)I)\]Reverse (Denoising) Process: Given a noisy sample $x_t$, how do we predict the slightly less noisy $x_{t-1}$? The goal is to learn a reverse model $p_{\theta}(x_{t-1} \mid x_t)$ to inverts this chain.

4.2.2 Forward Diffusion and Reverse Denoising

According to previous post, the solution of DDPM aims to maximize the log-likelihood of $p_{\text data}(x_0)$, which can be reduced to maximizing the variational lower bound, and maximizing the variational lower bound can further be derived as minimizing the KL divergence between $p_{\theta}(x_{t-1} \mid x_t)$ and $q(x_{t-1} \mid x_t, x_0)$.

\[\mathcal{L}_\text{ELBO} = \mathbb{E}_q \left[ \log p_\theta(x_0 \mid x_1) - \log \frac{q(x_{T} \mid x_0)}{p_\theta(x_{T})} - \sum_{t=2}^T \log \frac{q(x_{t-1} \mid x_t, x_0)}{p_\theta(x_{t-1} \mid x_t)} \right]\]The true posterior $q(x_{t-1} \mid x_t, x_0)$ can be computed using Bayes’ rule and the Markov property of the forward chain:

\[q(x_{t-1} \mid x_t, x_0) = \frac{q(x_t \mid x_{t-1}) q(x_{t-1} \mid x_0)}{q(x_t \mid x_0)}\]Since all three terms in the right sides are Gaussian distribution, the posterior $ q(x_{t-1} \mid x_t, x_0) $ is also Gaussian:

\[q(x_{t-1} \mid x_t, x_0) = \mathcal{N}\left( x_{t-1}; \tilde{\mu}_t(x_t, x_0), \tilde{\beta}_t I \right)\]with mean and and variance:

\[\tilde{\mu}_t(x_t, x_0) = \frac{1}{\sqrt{\alpha_t}} \left( x_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}} \epsilon \right) \quad , \quad \tilde{\beta}_t = \frac{(1 - \bar{\alpha}_{t-1}) \beta_t}{1 - \bar{\alpha}_t}\label{eq:14}\]Since our goal is to approximate distribution $ p_{\theta}(x_{t-1} \mid x_t) $ using distribution $q(x_{t-1} \mid x_t, x_0)$, this means that our reverse denoising process must also follow a Gaussian distribution, with the same mean and variance. Because the added noise in the reverse process is unknown, the training objective of DDPM is to predict this noise. We use $\epsilon_{\theta}(x_t, t)$ to represent the predicted noise output, substituting $ \epsilon $ with the noise predicted $\epsilon_{\theta}(x_t, t)$:

\[p_{\theta}(x_{t-1} \mid x_t) = \mathcal{N}\left( x_{t-1}; {\mu}_{\theta}(x_t, x_0), {\beta}_{\theta} I \right)\]where:

\[{\mu}_{\theta}(x_t, x_0) = \frac{1}{\sqrt{\alpha_t}} \left( x_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}} \epsilon_\theta(x_t, t) \right) \ \ , \ \ {\beta}_{\theta}=\tilde{\beta}_t = \frac{(1 - \bar{\alpha}_{t-1}) \beta_t}{1 - \bar{\alpha}_t}\]Sampling is the direct inverse of the forward process. One starts with pure noise $x_T \sim \mathcal{N}(0, I)$ and iteratively applies the learned reverse step for $ t = T, T-1, \dots, 1$, using the noise prediction $\epsilon_{\theta}(x_t, t)$ at each step to denoise the sample until a clean $x_0$ is obtained.

\[x_{t-1} = \frac{1}{\sqrt{\alpha_t}} \left( x_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}} \epsilon_\theta(x_t, t) \right) + \sqrt{\frac{(1 - \bar{\alpha}_{t-1}) \beta_t}{1 - \bar{\alpha}_t}} \epsilon\]4.2.3 Theoretical Elegance and Practical Bottleneck

DDPMs offered a strong theoretical foundation, stable training, and state-of-the-art sample quality. However, this came at a steep price: the curse of a thousand steps. The model’s theoretical underpinnings relied on the Markovian assumption and small step sizes, forcing the sampling process to be painstakingly slow and computationally expensive.

4.3 The Initial Convergence: Tying the Two Worlds Together

For a time, these two paradigms—one estimating a continuous gradient field, the other reversing a discrete noise schedule—seemed distinct. Yet, a profound and simple connection lay just beneath the surface. It was shown that the two seemingly different objectives were, in fact, two sides of the same coin.

The score function $s(x_t, t)$ at a given noise level is directly proportional to the optimal noise prediction $\epsilon(x_t, t)$:

\[s_{\theta}(x_t, t) = \nabla_{x_t} \log p(x_t) = -\frac{\epsilon_{\theta}(x_t, t)} { \sigma_t}\]where $\sigma_t$ is the standard deviation of the noise at time $t$.

This equivalence is beautiful. Predicting the noise is mathematically equivalent to estimating the score. The score-based view provides the physical intuition of climbing a probability landscape, while the DDPM view provides a stable training objective and a concrete, discrete-time mechanism.

With this link established, the two parallel streams began to merge into a single, powerful river. This convergence set the stage for the next major leap: breaking free from the rigid, one-step-at-a-time sampling of DDPM, and ultimately, the development of a unified SDE/ODE theory that could explain and improve upon both.

Part II — Fast Sampling without Retraining

Inference-time acceleration (training-free) treats a pretrained diffusion/score model as a fixed oracle and speeds up generation solely by changing the sampler—the time discretization, update rule, and numerical integration strategy—without modifying parameters or re-running training. Under this paradigm, we keep the same network evaluations \(f_\theta(x_t, t, c)\) but reduce the number of required evaluations (NFE) and improve stability by exploiting better discretizations (e.g., deterministic DDIM-style updates), multistep “pseudo-numerical” schemes (e.g., PNDM/PLMS), and higher-order ODE/SDE solvers tailored to diffusion dynamics (e.g., DEIS, DPM-Solver(++), UniPC).

Conceptually, these methods replace a long sequence of small Euler-like steps with fewer, more accurate steps by injecting local polynomial/exponential approximations, error-correcting history terms, or probability-flow–consistent updates. As a result, they offer a plug-and-play speed–quality trade-off: the model and training pipeline remain unchanged, and faster sampling is achieved purely through improved inference-time computation.

5. Breaking the Markovian Chain with DDIM

The stunning quality of DDPMs came at a steep price: the rigid, step-by-step Markovian chain. This constraint, while theoretically elegant, was a practical nightmare, demanding a thousand or more sequential model evaluations for a single image. The field desperately needed a way to accelerate this process without a catastrophic loss in quality. The answer came in the form of Denoising Diffusion Implicit Models (DDIM) 7, a clever reformulation that fundamentally altered the generation process.

5.1 From Stochastic Denoising to Deterministic Prediction

The core limitation of the DDPM sampler lies in its definition of the reverse process, which models $p_{\theta}(x_{t-1} \mid x_t)$. This conditional probability is what creates the strict, one-step-at-a-time dependency.

The DDIM paper posed a brilliant question: what if we don’t try to predict $x_{t-1}$ directly? What if, instead, we use our noise-prediction network $\epsilon_{\theta}(x_t, t)$ to make a direct guess at the final, clean image $x_0$? This is surprisingly straightforward. Given $x_t$ and the predicted noise $\epsilon_{\theta}(x_t, t)$, we can rearrange the forward process formula to solve for an estimated $x_0$, which we’ll call $\hat{x}_0$:

\[\hat{x}_0 = \frac{(x_t - \sqrt{1 - \bar{\alpha}_t} \, \epsilon_{\theta}(x_t, t))}{\sqrt{\bar{\alpha}_t}}\]This single equation is the conceptual heart of DDIM. By first estimating the final destination $x_0$, we are no longer bound by the previous step. We have a “map” that points from our current noisy location $x_t$ directly to the origin of the trajectory. This frees us from the Markovian assumption and opens the door to a much more flexible generation process.

5.2 The Freedom to Jump: Non-Markovian Skip-Step Sampling

With an estimate of pred \(\hat{x}_0\) in hand, DDIM constructs the next sample $x_{t-1}$ in a completely different way. It essentially says: “Let’s construct $x_{t-1}$ as a weighted average of three components”:

The “Clean Image” Component: The estimated final image predicted $\hat x_0$, pointing towards the data manifold.

The “Noise Direction” Component: The direction pointing from the clean image $x_0$ back to the current noisy sample $x_t$, represented by $\epsilon_{\theta}(x_t, t)$.

A Controllable Noise Component: An optional injection of fresh random noise.

The DDIM update equation is as follows:

\[x_{t-1} = \sqrt{\bar \alpha_{t-1}}\,{\hat x_0} + \sqrt{1-\bar \alpha_{t-1}-\sigma_t^2}\, \epsilon_{\theta}(x_t, t) + \sigma_t \, z_t\label{eq:20}\]Here, \(z_t\) is random noise, and $\sigma_t$ is a new hyperparameter that controls the amount of stochasticity. $\sigma_t = \eta \tilde{\beta}_t$, where $\tilde{\beta}_t$ is defined in DDPM (Equation \ref{eq:14})

This formulation \ref{eq:20} is non-Markovian because the calculation of $x_{t-1}$ explicitly depends on predicted $x_0$, which is an estimate of the trajectory’s origin, not just the immediately preceding state $x_t$. Because this process is no longer Markovian, the “previous” step doesn’t have to be $t-1$. We can choose an arbitrary subsequence of timesteps from the original $1, \dots, T$, for example, $1000, 980, 960, …, 20, 0$. Instead of taking 1000 small steps, we can now take 50 large jumps. At each jump from $t$ to a much earlier $s < t$, the model predicts $x_0$ and then uses the DDIM update rule to deterministically interpolate the correct point $x_s$ on the trajectory. This “skip-step” capability was a game-changer. It allowed users to drastically reduce the number of function evaluations (NFEs) from 1000 down to 50, 20, or even fewer, providing a massive speedup with only a minor degradation in image quality.

Formula \ref{eq:20} also reveals an important theory: for any $x_{t-1}$, the marginal probability density obtained through DDIM sampling is consistent with the marginal probability density of DDPM.

\[q(x_{t-1} \mid x_0 ) = \mathcal{N}(x_{t-1}; \sqrt{\bar{\alpha}_{t-1}} {x_0}, (1-\bar{\alpha}_{t-1})I)\]This is why DDIM can take large jumps and still produce high-quality images using a model trained for the one-step DDPM process.

5.3 The $\eta$ Parameter: A Dial for Randomness

DDIM introduced another powerful feature: a parameter denoted as $\eta$ that explicitly controls the stochasticity of the sampling process.

$\eta = 0$: (Deterministic DDIM): When $\eta = 0$, the random noise term in the update rule is eliminated. For a given initial noise $x_T$, the sampling process will always produce the exact same final image

x_0. This is the “implicit” in DDIM’s name, as it defines a deterministic generative process. This property is incredibly useful for tasks that require reproducibility or image manipulation (like SDEdit), as the generation path is fixed.$\eta = 1$ (Stochastic DDIM): When $\eta = 1$, DDIM adds a specific amount of stochastic noise at each step. It was shown that this choice makes the marginal distributions $p(x_t)$ match those of the original DDPM sampler. It behaves much like DDPM, offering greater sample diversity at the cost of reproducibility.

$0 < \eta < 1$ (Hybrid Sampling): Values between 0 and 1 provide a smooth interpolation between a purely deterministic path and a fully stochastic one, giving practitioners a convenient “dial” to trade off between diversity and consistency.

5.4 Bridging to a Deeper Theory: The Lingering Question

DDIM was a massive practical leap forward. It provided the speed that was desperately needed and introduced the fascinating concept of a deterministic generative path. However, its derivation, while mathematically sound, was rooted in the discrete-time formulation of DDPM. The deterministic path ($\eta=0$) worked exceptionally well, but it raised a profound theoretical question:

What is this deterministic path? If a continuous process underlies generation, can we describe this path more fundamentally?

The success of deterministic DDIM strongly hinted that the stochasticity of Langevin Dynamics and DDPM sampling might not be strictly necessary. It suggested the existence of a more fundamental, deterministic flow from noise to data. Explaining the origin and nature of this flow was the next great challenge.

This question sets the stage for our next chapter, where we will discover the grand, unifying framework of Stochastic and Ordinary Differential Equations (SDEs/ODEs), which not only provides the definitive answer but also reveals that Score-Based Models and DDPMs were two sides of the same mathematical coin all along.

6. The Unifying Framework of SDEs and ODEs

The insight behind DDIM—that sampling can follow a deterministic path derived from a learned vector field—opens the door to a more general formulation: diffusion models can be expressed as continuous-time stochastic or deterministic processes. In this chapter, we formalize that insight by introducing the Stochastic Differential Equation (SDE) and the equivalent Probability Flow Ordinary Differential Equation (ODE) perspectives. This unified view provides the theoretical bedrock for nearly all subsequent developments in diffusion sampling, including adaptive solvers, distillation, and consistency models.

6.1 From Discrete Steps to Continuous Time

The core insight is to re-imagine the diffusion process not as $T$ discrete steps, but as a continuous process evolving over a time interval, say $t \in [0, T]$. In this view, $x_0$ is the clean data at $t=0$, and $x_T$ is pure noise at $t=T$. The forward process is no longer a chain but a trajectory governed by a Stochastic Differential Equation (SDE) that continuously injects noise.

\[dx_t=f(x_t, t)dt+g(t)dw_t\]Where $f(x_t, t)$ is a drift term, which describes the deterministic part of the SDE’s evolution. $g(t)$ is a diffusion coefficient, which scales the magnitude of the random noise. $dw_t$ is a standard Wiener process, $dw_t=\sqrt{dt}\epsilon,\,\epsilon \sim \mathcal{N}(0, I)$.

This continuous formulation is incredibly powerful because it allows us to leverage the mature and rigorous mathematics of stochastic calculus. More importantly, it was proven by Song et al. in their seminal work that there exists a corresponding reverse-time SDE that can transform pure noise at $t=T$ back into data at $t=0$. This reverse SDE is the true, continuous-time “master equation” for generation.

\[dx_t = \left[ f(x_t, t) - g(t)^2 s_{\theta}(x_t, t) \right] dt + g(t) d{\bar w}_t\]Where $s_{\theta}(x_t, t)$ is the score function, learned by the neural network. $d{\bar w}_t$ An infinitesimal “kick” from a standard Wiener process (random noise).

This single equation serves as the grand unifier for the stochastic samplers from section 2. Both Annealed Langevin Dynamics (from NCSN) and the DDPM sampling procedure can be shown to be different numerical discretization schemes for this exact same SDE. They were, all along, just two different ways to approximate the solution to one underlying continuous process.

6.2 The Probability Flow ODE: Discovering the Deterministic Path

The reverse SDE provides a complete picture for stochastic generation, but what about the deterministic path discovered by DDIM? This is where the second part of the unified theory comes into play.

For any given SDE that describes the evolution of individual random trajectories, there exists a corresponding Ordinary Differential Equation (ODE) that describes the evolution of the probability density of those trajectories. This is known as the Probability Flow (PF) ODE. While the SDE traces a jagged, random path, the PF-ODE traces a smooth, deterministic “flow” of probability from the noise distribution to the data distribution.

The PF-ODE corresponding to the reverse SDE is:

\[dx_t = \left[ f(x_t, t) - \frac{g(t)^2}{2} s_{\theta}(x_t, t) \right] dt\]Notice the two critical differences from the SDE:

- The diffusion term is gone. There is no more stochastic noise injection. The process is entirely deterministic.

- The score term $s_{\theta}$ is scaled by $\frac{1}{2}$. This factor arises directly from the mathematical conversion (via the Fokker-Planck equation) from the SDE to its deterministic counterpart.

This ODE is the definitive answer to the puzzle of DDIM. The deterministic path ($\eta = 0$) that DDIM so effectively approximates is, in fact, a trajectory of this very Probability Flow ODE. The DDIM update rule is a specific (and quite effective) numerical solver for this equation.

6.3 A New Paradigm for Sampling

The SDE/ODE framework marks a moment of theoretical completion and a paradigm shift. We now have a single, coherent view that elegantly encompasses all previous methods:

This new perspective immediately begs the next question. The world of numerical analysis has spent over a century developing sophisticated methods for solving ODEs—from simple Euler methods to high-order Runge-Kutta schemes. Can we simply apply these off-the-shelf, classical solvers to the PF-ODE and achieve even greater speed and accuracy?

As we will see in the next chapter, the answer is surprisingly complex. The unique properties of the diffusion PF-ODE present significant challenges to standard numerical methods, necessitating the development of a new class of specialized, “diffusion-aware” solvers.

7. High-Order PF-ODE Solver in Diffusion Models

The transition from stochastic sampling (via SDEs) to deterministic sampling (via the Probability Flow ODE) transformed diffusion generation into a numerical integration problem. Once the evolution of data can be written as an ODE,

\[\frac{d\mathbf{x}}{dt} = \mathbf{f}(t,\mathbf{x}) - \frac{g^2(t)}{2}\, s_\theta(\mathbf{x}, t),\]the task of generating a sample becomes equivalent to integrating this equation backward in time, from random noise at $t = T$ to the data manifold at $t = 0$.

At first glance, this seems to open the door to the rich ecosystem of traditional numerical solvers, such as Euler, Heun, Runge–Kutta, Adams–Bashforth, and so on. However, diffusion sampling presents unique challenges that make these classical algorithms inadequate without adaptation.

7.1 Why Classical ODE Solvers Fall Short

The PF-ODE is not a conventional physics or engineering system but a learned dynamical system defined by a neural network. Classical solvers assume smooth, analytic derivatives and prioritize path fidelity—ensuring each intermediate step closely matches the true trajectory. Diffusion sampling, by contrast, only requires endpoint fidelity: as long as the final state $x_0$ lies on the data manifold, large intermediate deviations are acceptable.

Moreover, evaluating the derivative $\mathbf{f}(t,\mathbf{x})$ involves a full forward pass of a deep neural network (often a U-Net), making every function evaluation costly. High-order solvers that need multiple evaluations per step rapidly become inefficient.

Compounding this, diffusion dynamics are stiff—different dimensions evolve at dramatically different rates—and the learned score field $s_\theta$ is only an approximation, introducing noise and bias that can destabilize high-order methods. These characteristics make standard numerical integration theory ill-suited to the diffusion context.

7.2 The Rise of Specialized Solvers

These difficulties led to a new family of diffusion-aware numerical solvers, designed specifically to balance accuracy, stability, and computational budget. Instead of preserving local precision at every point, these methods explicitly optimize for global fidelity of the terminal sample under limited NFEs (number of function evaluations).

This insight underpins the design of solvers such as DPM-Solver, DPM-Solver++, DEIS, UniPC, and many others, which adapt principles from exponential integrators, predictor–corrector frameworks, and adaptive step-size control to the peculiarities of PF-ODE dynamics. Collectively, they represent a crucial bridge between differential-equation theory and practical, efficient diffusion sampling.

Because this topic encompasses substantial theoretical and algorithmic depth—including solver derivations, stability analyses, and empirical comparisons—it is discussed separately in a dedicated article. For the complete treatment of high-order PF-ODE solvers, please refer to the following

Part III — Distillation for Fast Sampling

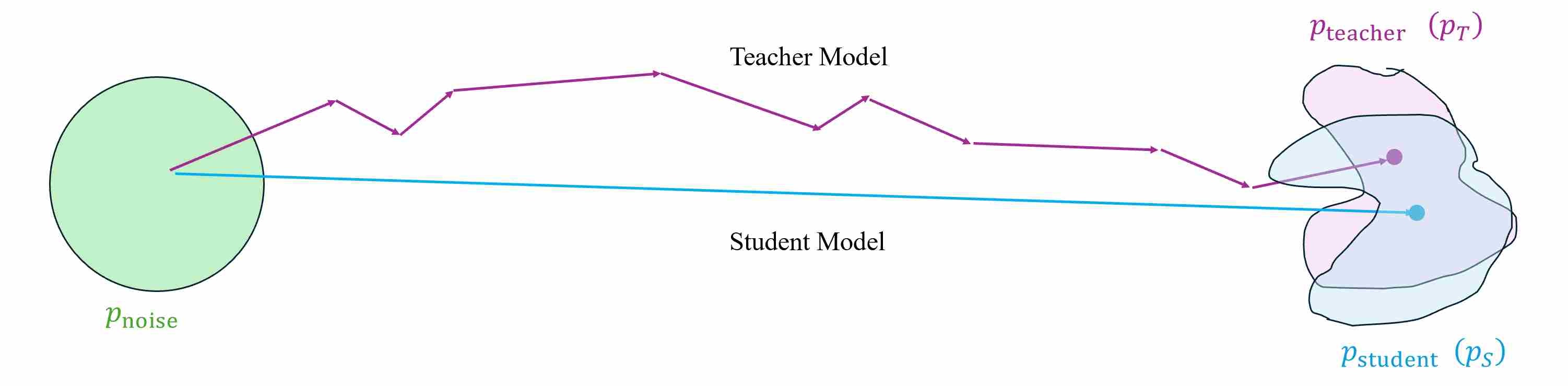

Although higher-order ODE solvers (e.g., DPM-Solver++, UniPC) can reduce the number of denoising steps from hundreds to fewer than twenty, the computational cost remains substantial for high-resolution image synthesis and real-time applications. The ultimate goal of sampling acceleration is to achieve few-step or even single-step generation without sacrificing fidelity or diversity.

Distillation-based approaches address this challenge by compressing the multi-step diffusion process into a student model that can approximate the teacher’s distribution within only one or a few evaluations. Unlike numerical solvers, distillation learns a direct functional mapping from noise to data, thereby converting iterative denoising into an amortized process.

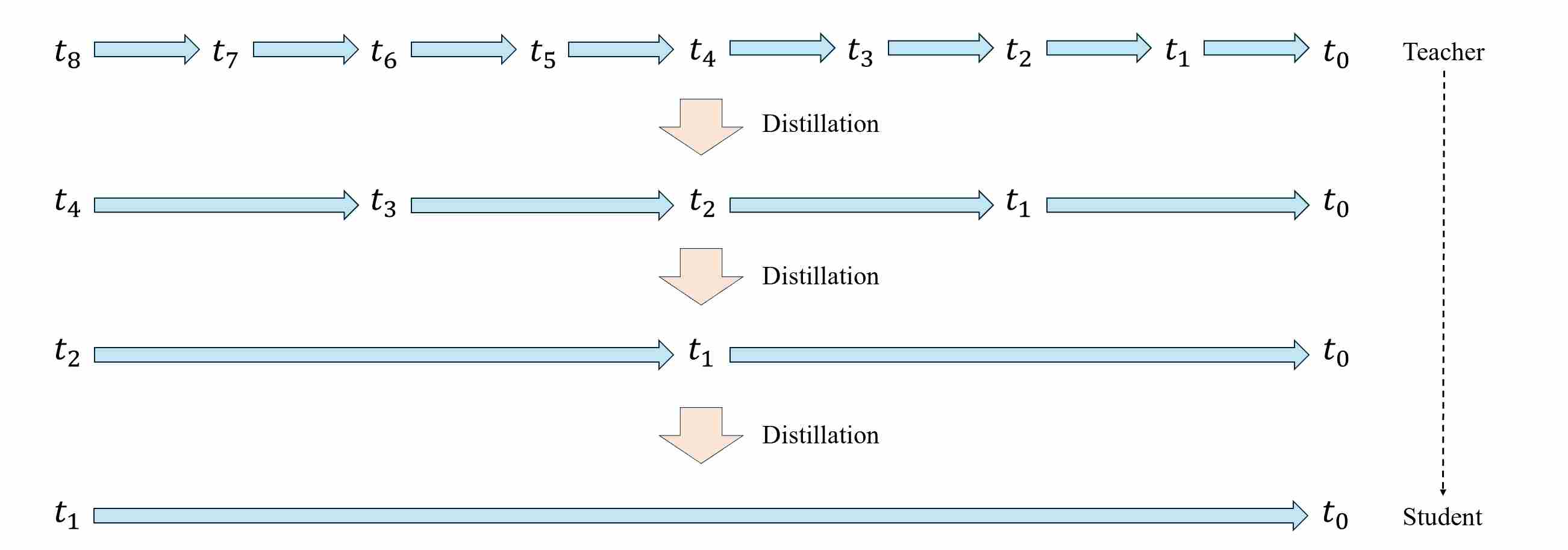

8. Trajectory-Based Distillation

Trajectory distillation is built on the principle distill how the teacher moves. Instead of matching only final samples, it compresses the teacher’s multi-step sampler into fewer, larger steps by teaching the student to reproduce the teacher’s transition operator \(x_{t_i}!\mapsto x_{t_{j}}\) (or an entire short segment of the trajectory).

The design philosophy is solver-centric: sampling is an algorithm, and distillation is a way to learn an approximate integrator / flow map that preserves the teacher’s path properties (stability, guidance behavior, error cancellation) under reduced NFE. Practically, this appears as step-halving curricula, multi-step-to-single-step regression targets, or direct learning of coarse-grained transitions.

8.1 Progressive Distillation

Progressive Distillation (PD) 8 compresses a pretrained “teacher” diffusion sampler that runs in $T$ steps into a “student” sampler that runs in $T/2$ steps, then recursively repeats the procedure \((T/2 \rightarrow T/4 \rightarrow \dots)\), typically reaching 4–8 steps with minimal quality loss.

Let \(\{t_n\}_{n=0}^{N}\) be a decreasing time/$\sigma$ grid with $t_0=0$ and $t_N=T$. Denote the teacher’s one-step transition (DDIM/ODE or DDPM/SDE) by

\[x_{t_{n-1}}^{\text{T}}=\Phi_\theta(x_{t_n};\,t_n \to t_{n-1}),\]where $\Phi_\theta$ is an ODE Solver. Its two-step composite

\[x_{t_{n-2}}^{\text{T}} = \Phi_\theta^{(2)}(x_{t_n};\,t_n \to t_{n-2}) = \Phi_\theta\big(\,\Phi_\theta(x_{t_n};\, t_n \to t_{n-1});\,t_{n-1}\to t_{n-2}\big).\]A student $f$ with parameters $\phi$ is trained to replace two teacher steps with one:

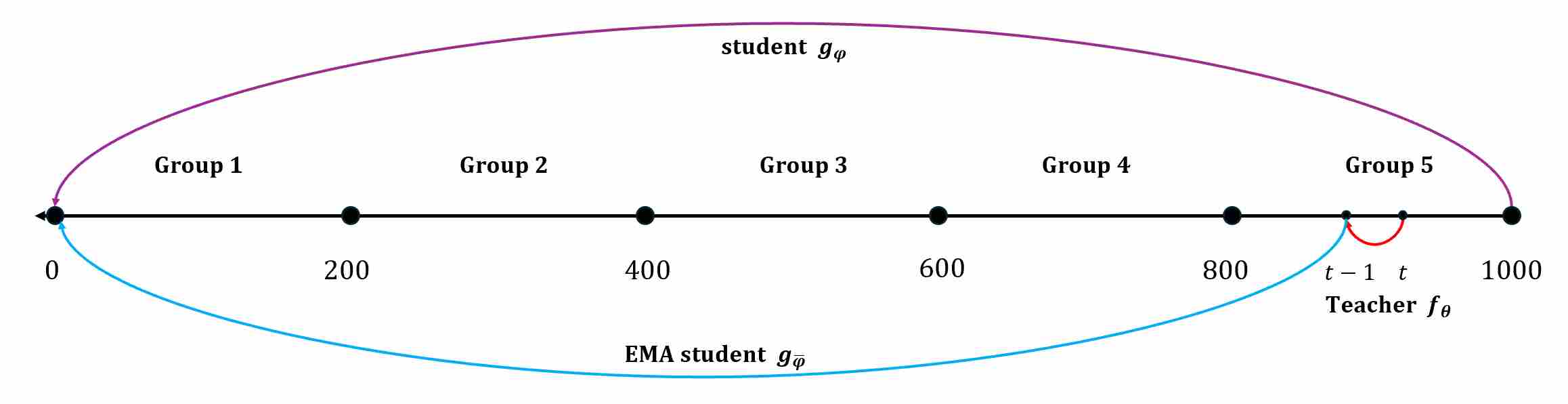

\[x_{t_{n-1}}^{\text{S}} = f_\phi(x_{t_n};\,t_n \to t_{n-1}) \approx \Phi_\theta^{(2)}(x_{t_n};\,t_n \to t_{n-2})\]A visualization of progressive distillation algorithm is shown as follows.

8.1.1 Target Construction and Loss Design

Training pairs are self-supervised by the teacher: Sample $x_{t_n}$ either from $\mathcal N(0,I)$ or by forward noising data \(x_0\sim p_{\text{data}}\) through \(q(x_{t_n} \mid x_0)\). Then, run two teacher steps to get the target state

\[x_{t_{n-2}}^{\text{T}}=\Phi_\theta^{(2)}(x_{t_n};\,t_n\to t_{n-2}) = x_{t_n} + \underbrace{\Delta^{(2)}_\theta(x_{t_n};\,t_n \to t_{n-2})}_{\text{Integral part from} \ t_{n} \text{ to } t_{n-2}}.\]PD can utilize different targets that we expect the student model to predict in one step from $x_t$, each corresponding to a distinct loss function. $x_{t_{n-2}}^{\text{T}$ is a simple and straightforward target that we expect the student model to predict, with this as the goal, we introduce the PD regression loss.

\[\mathcal L_{\text{state}} = \mathbb E_{x_{t_n}} \big\| f_\phi(x_{t_n};\,t_n\to t_{n-1}) - x_{t_{n-2}}^{\text{T}} \big\|_2^2\]8.1.2 Strengths and Limitations

Progressive Distillation offers an elegant and empirically robust pathway toward accelerating diffusion sampling. Its main strengths lie in its stability, modularity, and data efficiency.

- Data-free (or data-light): supervision comes from the teacher sampler itself.

- Stable and modular: each stage is a local two-step merge problem.

- Excellent trade-off at 4–8 steps: large speedups with negligible FID/IS degradation.

- Architecture-agnostic: applies to UNet/DiT backbones and both pixel/latent spaces.

However, PD also faces fundamental limitations.

Produce blurry and over-smoothed output: The MSE loss minimizes the squared difference between the prediction and the target, which mathematically drives the optimal solution toward the conditional mean of all possible teacher outputs.

- One-step limit is hard: local two-step regression accumulates errors when composed; texture/high-freq details degrade.

- Teacher-binding: student quality inherits teacher biases (guidance scale, scheduler, failure modes).

- Compute overhead: multiple PD stages plus teacher inference can approach original pretraining cost (though still typically cheaper).

8.1.3 A Canonical Training Algorithm (Template)

Below is a minimal, implementation-oriented template that captures the core algorithmic skeleton independent of specific papers.

# Progressive Distillation (PD) — 2× compression per phase

#

# teacher_step: a K-step sampler transition using teacher model θ (DDIM / PF-ODE / solver)

# student_step: a single transition using student model φ but with stride-2 (t_i -> t_{i-2})

#

# Key idea:

# train student to match the *two-step composed teacher transition*.

def train_progressive_distillation(student, teacher, schedule, phases, optimizer):

"""

schedule: provides alpha(t), sigma(t), and a time grid {t_0=0 < ... < t_K=T}

phases: list of step counts, e.g., [K0, K0/2, K0/4, ..., 1]

"""

for K in phases:

# define teacher grid with K steps, student grid with K/2 steps (stride 2)

t_grid = schedule.make_time_grid(K) # descending: t_K -> ... -> t_0

for it in range(num_train_steps_per_phase):

# 1) sample data/noise and a random starting index n (need at least 2 teacher steps)

x0 = sample_data()

eps = sample_gaussian_like(x0)

n = random_int(low=2, high=K) # choose t_n

# 2) form x_{t_n} by forward diffusion

t_n = t_grid[n]

x_tn = schedule.alpha(t_n) * x0 + schedule.sigma(t_n) * eps

# 3) teacher: two-step composite transition t_n -> t_{n-1} -> t_{n-2}

with no_grad():

t_n1 = t_grid[n - 1]

t_n2 = t_grid[n - 2]

x_tn1_T = teacher_step(teacher, x_tn, t_n, t_n1, schedule) # 1 step

x_tn2_T = teacher_step(teacher, x_tn1_T, t_n1, t_n2, schedule) # 2nd step

# 4) student: one stride-2 transition t_n -> t_{n-2}

x_tn2_S = student_step(student, x_tn, t_n, t_n2, schedule)

# 5) distillation loss (L2/L1/LPIPS)

L = distance(x_tn2_S, stopgrad(x_tn2_T))

optimizer.zero_grad()

L.backward()

optimizer.step()

# 6) after phase converges: replace teacher with student (optional)

teacher = copy_as_teacher(student) # or EMA(student)

Inference (Fast Generation). After progressive distillation, generation uses only the distilled student denoiser, but with a much shorter time grid. Concretely, sample an initial noise \(x_T\sim\mathcal N(0,I)\) and run the same deterministic sampler form (e.g., DDIM / PF-ODE style update) for \(K\) steps, where \(K\) is the final distilled step budget (often obtained by repeatedly halving the number of steps during training). No teacher evaluations are required at test time; the acceleration comes purely from replacing a long trajectory with a small number of coarse, student-calibrated updates.

8.2 Transitive Closure Time-Distillation

TRACT (Transitive Closure Time-Distillation) is a trajectory distillation method that generalizes binary (2-step) distillation to S-step groups, so that a teacher sampler with $T$ steps can be reduced to $T/S$ steps in one phase, and only a small number of phases (typically 2–3) are needed to reach very small step counts. 9

8.2.1 Core Idea: From Binary Merge to Transitive Closure

In a single distillation phase, the teacher schedule \(\{t_0, t_1, \dots, t_T\}\) is partitioned into contiguous groups of size $S$. Within a group, TRACT asks the student to “jump” from any time in the group directly to the group end.

Concretely, pick a group boundary $(t_{k}, t_{k-S})$ (sampling runs backward), and consider any intermediate $t_{k-i}$ with $i \in {0,\dots,S-1}$. We define:

Teacher one-step transition (e.g., DDIM / ODE step):

\[x_{t_{k-i-1}}^{\mathrm T} = f_{\theta}(x_{t_{k-i}};\,t_{k-i}\to t_{k-i-1}).\]Student long jump transition (same network, but larger time stride):

\[x_{t_{k-S}}^{\mathrm S} = g_{\varphi}(x_{t_{k-i}};\,t_{k-i}\to t_{k-S}).\]

If we naively enforced the “jump-from-anywhere” constraint, training would require multiple student calls per example (one for each possible $t_{k-i}$), which is expensive.

\[g_\varphi(x_{t_{k-i}};\,t_{k-i}\to t_{k-S})\;=\;f_{\theta}(\ldots f_{\theta}(x_{t_{k-i}};\,t_{k-i}\to t_{k-i-1})).\]TRACT resolves this by introducing a self-teacher $g_{\bar\varphi}$, an EMA of the student, and enforces transitive closure via a recurrence: the “long jump” from $t_{k-i}$ to $t_{k-S}$ is defined by composing

- One teacher step: one teacher step from $t_{k-i}$ to $t_{k-i-1}$ and

- Bootstrapping with EMA-student: one EMA-student jump from $t_{k-i-1}$ to $t_{k-S}$.

A simple way to write the training target state is:

If $i=S-1$ (already adjacent to the group end), the teacher can provide the endpoint directly:

\[x_{t_{k-S}}^{\mathrm T}=f_{\theta}(x_{t_{k-S+1}};\,t_{k-S+1}\to t_{k-S}).\]Otherwise (general case), use the self-teacher recurrence:

\[x_{t_{k-S}}^{\mathrm T} = g_{\bar\varphi}\Big( f_{\theta}(x_{t_{k-i}};\,t_{k-i}\to t_{k-i-1}); \,t_{k-i-1}\to t_{k-S}\Big).\]

8.2.2 Target Construction and Objective

Like PD/BTD, TRACT can be implemented with a signal-prediction (i.e., $\hat x_0$) network. The student network itself still takes the standard diffusion inputs $(x_t, t, c)$ and predicts $\hat x_0$; the “destination” $t_{k-S}$ is used only in the deterministic update (it selects the coefficients). 9

Let $x_t=\sqrt{\alpha_t}x_0+\sqrt{1-\alpha_t}\,\epsilon$ (VP parameterization), so during training we can compute the exact noise:

\[\epsilon = \frac{x_t-\sqrt{\alpha_t}\,x_0}{\sqrt{1-\alpha_t}}.\]Given the target endpoint state $x_{t_{k-S}}^{\mathrm T}$ (constructed above), we choose the target clean signal $x_0^{\star}$ so that a DDIM-style deterministic jump from $t$ to $t_{k-S}$ using the same $\epsilon$ reproduces $x_{t_{k-S}}^{\mathrm T}$:

\[x_0^{\star} = \frac{x_{t_{k-S}}^{\mathrm T} - \sqrt{1-\alpha_{t_{k-S}}}\,\epsilon}{\sqrt{\alpha_{t_{k-S}}}}.\]Then the student is trained by a standard regression (optionally with the usual DDIM/VP weighting):

\[\mathcal L_{\text{TRACT}} = \mathbb E\Big[ \lambda(t)\,\big\| \hat x_{0,\phi}(x_t,t,c) - x_0^{\star}\big\|_2^2 \Big].\]This view also clarifies a common confusion: TRACT does not require the model to explicitly take an endpoint $s$ as input. The network remains a standard $\hat x_0(x_t,t,c)$ predictor; the chosen stride (e.g., “jump to group end”) is realized by plugging $\hat x_0$ into a deterministic solver update with the corresponding $(\alpha_t,\alpha_s)$. 9

Important: why the target construction enforces $x_t$ and $x_s$ lie on the same DDIM trajectory

In TRACT, the supervision target is constructed under the DDIM (η = 0) trajectory model, where a trajectory is characterized by a shared latent noise ε (and a shared clean signal x₀) across time. Concretely, DDIM assumes that along a deterministic path we can write, for any two times t > s, $$ x_t = \sqrt{\gamma_t}\,x_0 + \sqrt{1-\gamma_t}\,\epsilon,\qquad x_s = \sqrt{\gamma_s}\,x_0 + \sqrt{1-\gamma_s}\,\epsilon, $$ with the same ε. TRACT then chooses the target x̂ (an x₀-prediction target) by eliminating ε and solving for x₀, yielding a closed-form x̂ that makes $(x_t, x_s)$ consistent with one DDIM trajectory. $$ \hat x = \frac{ x_s\sqrt{1-\gamma_t}-x_t\sqrt{1-\gamma_s} }{ \sqrt{\gamma_s}\sqrt{1-\gamma_t}-\sqrt{\gamma_t}\sqrt{1-\gamma_s} }, $$ The regression loss pushes the student $g_φ(x_t,t)$ toward this x̂; equivalently, it enforces that the inferred noise $$ \hat\epsilon=\frac{x_t-\sqrt{\gamma_t}\,g_\phi(x_t,t)}{\sqrt{1-\gamma_t}}, $$ is the same noise that reproduces x_s under DDIM reconstruction. Therefore, the target construction does not merely fit x₀ in isolation—it explicitly enforces that x_t and x_s share the same latent noise and hence lie on the same deterministic DDIM path.

8.2.3 TRACT vs. Progressive Distillation (Key Differences)

- Stride: PD enforces a fixed compression ratio of 2 per phase; TRACT uses an arbitrary group size $S$, enabling much larger stride per phase. 9

- Constraint form: PD matches a two-step teacher composition with a one-step student; TRACT enforces a transitive-closure recurrence over a group, so that jumps from interior points are consistent with jumps from later points. 9

- Teacher quality: PD’s “teacher of the next phase” is the previous student; TRACT’s recurrence uses an EMA self-teacher inside a phase to stabilize targets while avoiding many generations. 9

- Compute: With the recurrence, each training sample needs only one teacher step + one self-teacher jump, rather than multiple student evaluations across group positions. 9

8.2.4 A Canonical Training Algorithm (Template)

Below is a minimal, implementation-oriented template that captures the core algorithmic skeleton independent of specific papers.

# TRACT (Transitive Closure Time-Distillation)

# Inputs:

# teacher: The original pretrained model (frozen)

# student: The model being trained

# student_ema: EMA copy of the student (provides stable targets)

# S: Group size (striding factor)

for each training step:

# 1. Sample trajectory parameters

x0 ~ p_data(x)

# Sample a group boundary index k (e.g., t_k is the destination)

# Sample an intermediate point i within the group [k, k+S]

k ~ ...

i ~ Uniform({0, ..., S-1})

t_start = t_{k+i}

t_end = t_k

# 2. Forward diffusion to t_start

epsilon ~ N(0, I)

x_start = sqrt(alpha[t_start]) * x0 + sqrt(1 - alpha[t_start]) * epsilon

# 3. Construct Target (Transitive Closure)

with no_grad:

# a) Take ONE step with the frozen Teacher

# t_{k+i} -> t_{k+i-1}

x0_teach = teacher(x_start, t_start)

x_prev = DDIM_Step(x_start, x0_teach, t_start, t_{k+i-1})

# b) Bridge the rest with EMA Student

# t_{k+i-1} -> t_k

if i == 0:

# If we are already adjacent to target, teacher step is enough

x_target_state = x_prev

else:

# Otherwise, use EMA student to jump the gap

x0_ema = student_ema(x_prev, t_{k+i-1})

x_target_state = DDIM_Step(x_prev, x0_ema, t_{k+i-1}, t_end)

# c) Convert state target back to implied x0 target (using t_start noise definition)

# This ensures x_start and x_target_state lie on same DDIM trajectory

x0_target = Solve_x0_from_DDIM(x_start, x_target_state, t_start, t_end)

# 4. Student Prediction

pred_x0 = student(x_start, t_start)

# 5. Regression Loss

L = MSE(pred_x0, x0_target)

update(student, L)

update_ema(student_ema, student)

Inference (Fast Generation). Inference with TRACT is extremely efficient because the student has learned the “transitive closure” of the trajectory, enabling it to perform “long jumps” directly. For a 1-step distillation, you simply input Gaussian noise $x_T$ and the student outputs the clean sample $x_0$ immediately.

For multi-step variants (e.g., 2 steps), you follow the specific strided schedule determined by the group size $S$ during training (e.g., jumping from $T \to T-S, \dots, \to , \dots$ and then final $0$). This bypasses the need for the EMA self-teacher or the original teacher during inference.

8.3 Guided Distillation

So far, our discussion implicitly assumes an unguided diffusion model, where each sampling step requires one network evaluation. However, modern high-quality text-to-image systems heavily rely on classifier-free guidance (CFG), which doubles per-step cost by evaluating: one for a conditional model (\(\epsilon_{c}\), with prompt / class), and another for an unconditional model (\(\epsilon_{\phi}\), null prompt), then mixing them with a guidance scale.

\[\epsilon_{total} = \epsilon_{\phi} + g\cdot(\epsilon_{c} - \epsilon_{\phi} )\]This creates a second bottleneck orthogonal to “number of steps”: even if you distill \(N\!\to\!4\) steps, CFG still costs roughly $2\times 4$ forward passes. Guided distillation 10 tackles both axes:

distill “two-model guidance” into one student (remove the 2× factor),

then apply progressive / trajectory distillation to reduce steps further.

8.3.1 Stage-One: Distill the Guidance Operator

Let the teacher provide two predictions at time $t$: a conditional one \(\hat x^{c}_{\theta}(z_t)\) and an unconditional one \(\hat x_{\theta}(z_t)\). CFG combines them into a guided prediction at guidance strength $w$:

\[\hat x^{w}_{\theta}(z_t) = (1+w)\,\hat x^{c}_{\theta}(z_t) - w\,\hat x_{\theta}(z_t).\]The key idea is to train a single student \(\hat x_{\eta_1}(z_t, w)\) (also conditioned on context $c$) to directly regress this combined target for a range of $w$ values:

\[\min_{\eta_1}\ \mathbb E_{w\sim\mathcal U[w_{\min},w_{\max}],\ t\sim\mathcal U[0,1],\ x\sim p_{\text{data}}} \Big[ \omega(t)\ \big\|\hat x_{\eta_1}(z_t,w) - \hat x^{w}_{\theta}(z_t)\big\|_2^2 \Big].\]This removes the “two-network per step” overhead while retaining the quality–diversity knob via $w$-conditioning. 10

8.3.2 Stage-Two: Distill Sampling Steps (Progressive / Binary)

After stage-one, the student already matches the guided teacher prediction at each time, but still requires many steps if we sample with the original schedule. Stage-two applies standard step distillation: train a student to match a two-step DDIM trajectory segment of the (guided) teacher in one step, then repeat to halve the number of steps again (initializing each new student from its teacher). 10

Conceptually, guided distillation is therefore “trajectory distillation” in two layers:

Operator distillation (guidance): distill the combination rule \((\hat x^c,\hat x)\mapsto \hat x^w\) into a single network.

Time distillation (steps): distill multi-step solver trajectories into fewer steps.

In practice, the biggest win often comes from stage-one (saving ~2× compute per step), while stage-two provides the additional “few-step” acceleration. 10

8.3.3 A Canonical Training Algorithm (Template)

Below is a minimal, implementation-oriented template that captures the core algorithmic skeleton independent of specific papers.

Guided Distillation (Stage 1):

# Guided Distillation (Stage 1: Distilling CFG)

# Inputs:

# teacher: Pretrained model (capable of conditional & unconditional)

# student: Model taking w (guidance scale) as extra input

# w_min, w_max: Range of guidance scales to distill

for each training step:

# 1. Prepare data

x0, c ~ p_data(x, c) # Image and Text Condition

t ~ Uniform(0, T)

epsilon ~ N(0, I)

x_t = diffuse(x0, t, epsilon)

# 2. Sample a random guidance scale w

w ~ Uniform(w_min, w_max)

# 3. Compute Teacher's Guided Output

with no_grad:

# Get conditional and unconditional noise predictions

eps_cond = teacher(x_t, t, c)

eps_uncond = teacher(x_t, t, null_c)

# Apply Classifier-Free Guidance formula

eps_target = eps_uncond + w * (eps_cond - eps_uncond)

# (Optional) Convert to x0 space if distilling x0

x0_target = predict_x0_from_eps(x_t, t, eps_target)

# 4. Student Prediction

# Student explicitly takes 'w' as conditioning input

x0_student = student(x_t, t, c, w)

# 5. Loss

L = MSE(x0_student, x0_target)

update(student, L)

Guided Distillation (Stage 2):

# Guided Distillation — Stage Two: distill sampling steps (PD/TRACT/etc.)

#

# Treat the stage-one guided student as "teacher sampler" (with guidance baked in),

# then perform standard step-distillation to reduce step count.

def train_guided_step_distill(student_fewstep, teacher_guided_student, schedule, phases, optimizer):

# identical skeleton to PD, except teacher_step uses teacher_guided_student

# and student_step uses student_fewstep (both already include guidance behavior).

train_progressive_distillation(

student=student_fewstep,

teacher=teacher_guided_student,

schedule=schedule,

phases=phases,

optimizer=optimizer

)

Inference (Fast Generation). The primary advantage during inference is the elimination of the double-batching requirement inherent in Classifier-Free Guidance. Instead of evaluating the model twice (once for $\epsilon_{\text{cond}}$ and once for $\epsilon_{\text{uncond}}$) and manually combining them, you feed the noise $x_t$, the text condition $c$, and your desired guidance scale $w$ (e.g., $w=7.5$) directly into the student model. The student outputs the pre-combined, guided result in a single forward pass per timestep, effectively doubling the inference speed for any given sampler (e.g., DDIM or Euler).

9. Adversarial-Based Distillation

Adversarial distillation follows the principle “distill fast realism by adding a critic.” The central belief is that ultra-few-step (especially one-step) generators suffer from perceptual artifacts that are not fully penalized by teacher-matching losses alone, so a discriminator provides a complementary signal that sharply enforces high-frequency realism and data-manifold alignment.

The philosophy is hybrid: keep the teacher as a semantic / distributional supervisor while using adversarial training as a perceptual regularizer that compensates for the limited iterative correction budget. In practice, adversarial objectives are rarely used alone—they are layered on top of score/disttribution/trajectory losses to stabilize training and improve texture fidelity.

9.1 Adversarial Diffusion Distillation

While Progressive Distillation (Sec. 8) compresses multi-step sampling through supervised teacher-student regression, it still depends on explicit trajectory matching: the student must imitate the teacher’s denoising transitions.

Adversarial Diffusion Distillation (ADD) 11 proposes a fundamentally different perspective: rather than minimizing a reconstruction distance between teacher and student trajectories, the student is trained adversarially to generate outputs that are indistinguishable from real data, while remaining consistent with the diffusion process implied by the teacher.

9.1.1 Motivation: From Trajectory Matching to Perceptual Realism

Trajectory-based distillation implicitly assumes the teacher path is a good proxy for perceptual quality. But under aggressive compression (e.g., 1–4 steps), supervised matching tends to reproduce the teacher’s mean-like behavior, leading to blurred edges and weak high-frequency details. ADD injects a critic that explicitly penalizes “off-manifold” artifacts, while the teacher still anchors the student to the diffusion prior and the condition (text/image guidance).

9.1.2 Principle and Formulation

Let $x_T\sim\mathcal N(0,I)$ denote the initial noise and $\Phi_\text{teacher}$ the full diffusion sampler of teacher model (DDIM, ODE, or PF-ODE). ADD seeks to train a student generator $G_\phi(x_K;\,K\to 0)$ that approximates the teacher’s final output $\Phi_\text{teacher}(x_T;\,T\to0)$ in $K$ step ($K$ is small, \(K \ll T\)), while simultaneously fooling an adversarial discriminator $D_\psi(x)$. Its loss function consists of two parts.

1. Teacher Consistency Loss. To maintain semantic alignment with the diffusion manifold, ADD retains a lightweight teacher consistency term:

\[\mathcal L_{\text{distill}} = \mathbb E_{x_T} \left[\|G_\phi(x_K;\,K\to 0) - \Phi_\text{teacher}(x_T;\,T\to 0)\|_2^2 \right].\]This term ensures that the student’s outputs lie near the teacher’s sample space, preserving the diffusion prior.

2. Adversarial Loss. A discriminator $D_\psi$ is trained to differentiate real data $x_0 \sim p_\text{data}$ from generated samples $G_\phi(x_K;\,K\to 0)$. The standard non-saturating (or hinge) GAN loss is adopted:

\[\mathcal L_{\text{adv}}^G = -\mathbb E_{x_T\sim \mathcal N(0,I)} [\log D_\psi(G_\phi(x_K;\,K\to 0))].\]By optimizing $\mathcal L_{\text{adv}}^G$ jointly with $\mathcal L_{\text{distill}}$, the student learns to generate visually realistic samples that also align with the teacher’s denoising manifold.

The overall training objective combines both components with a weighting $\lambda_{\text{adv}}$:

\[\mathcal L_{\text{ADD}} = \mathcal L_{\text{distill}} +\lambda_{\text{adv}}\, \mathcal L_{\text{adv}}^G.\]During training, the discriminator $D_\psi$ is updated alternately to maximize $\mathcal L_D$.

\[\mathcal L_D = -\,\mathbb E_{x_0\sim p_\text{data}} [\log D_\psi(x_0)] -\,\mathbb E_{x_T\sim \mathcal N(0,I)} [\log(1 - D_\psi(G_\phi(x_K;\,K\to 0)))]\]9.1.3 Implementation Mechanism

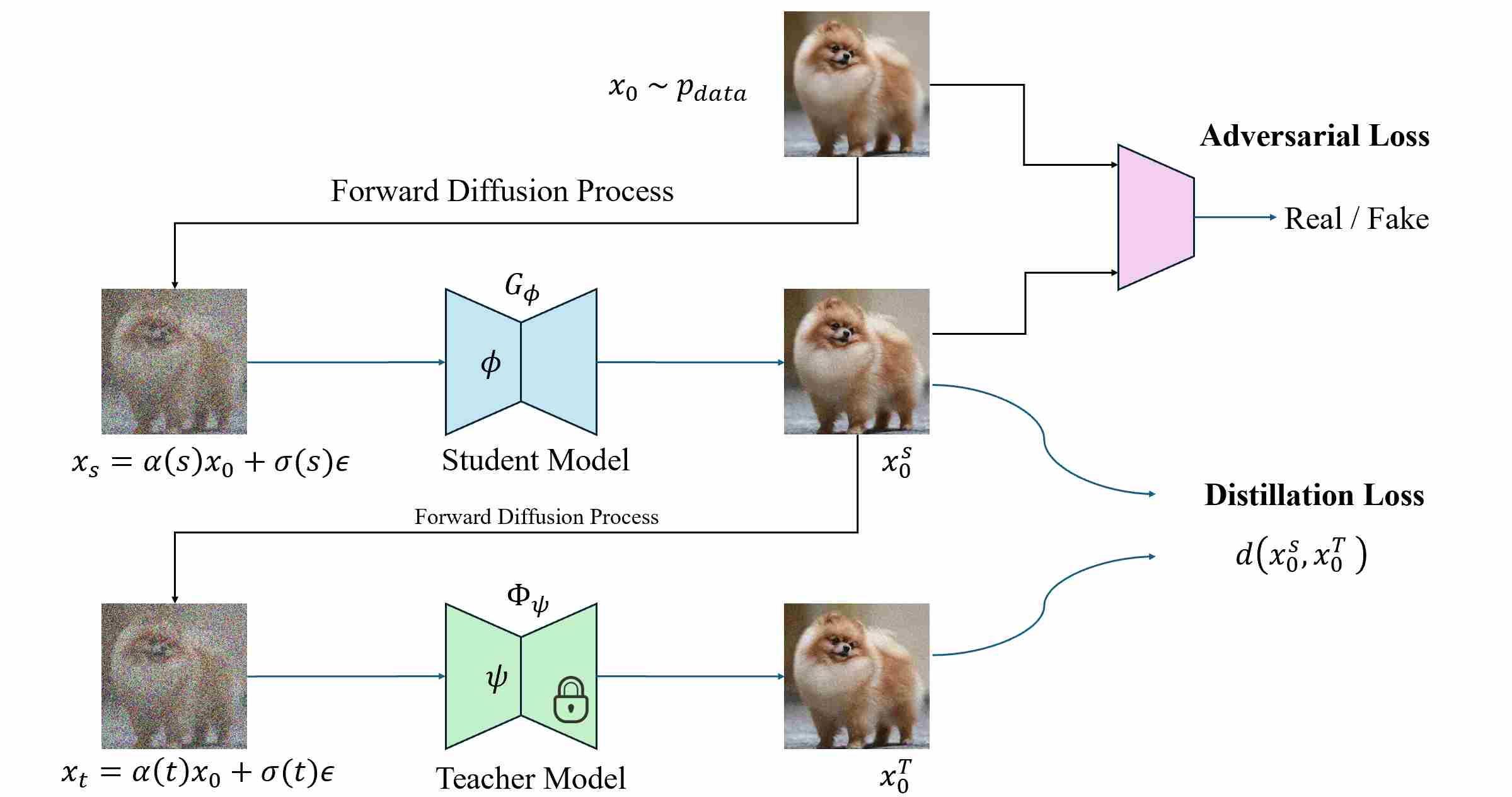

The training procedure can be express in the following figure.

During training, let $x_0 \sim p_{\text{data}}$.

Step 1: utilize forward diffusion process to add noise to clean image $x_0$.

\[x_s = \alpha(s)x_0 + \sigma(s)\epsilon ,\qquad \epsilon \sim \mathcal N(0,I).\]where $0 < s \leq K $.

step 2: The student first performs a s-step denoising:

\[x_0^{S}=G_\phi(x_s; s\to 0)\]This represents the image the student would produce during inference.

step 3: Instead of drawing real data $x_0$ again from the dataset, ADD reuses the student’s own output $x_0^{S}$ and applies forward diffusion:

\[x_t = \alpha(t) x_0^{S} + \sigma(t)\epsilon^{'} , \qquad \epsilon^{'} \sim \mathcal N(0,I).\]where $0 < t \leq T $. This design is crucial—the teacher is conditioned on the student’s current distribution rather than on real data. By doing so, the teacher supervises exactly the domain that the student will visit at inference time, forming a closed-loop, on-policy distillation process.

step 4: The teacher diffusion model, typically a pretrained DDIM or PF-ODE sampler, now starts from $x_t$ and performs full multi-step denoising:

\[x_0^{T}=\Phi_{\text{teacher}}(x_t;\,t\to 0),\]yielding a high-quality reconstruction of what that noisy sample should look like under the teacher’s diffusion dynamics.

step 5: The pair $(x_0^{S},,x_0^{T})$ defines the distillation target, while an adversarial discriminator evaluates realism relative to real data $x_0 \sim p_{\text{data}}$.

At inference, only the student is used: one forward pass from Gaussian noise $x_T$ directly yields $x_0^S$. The teacher is entirely discarded.

9.1.4 A Canonical Training Algorithm (Template)

Below is a minimal, implementation-oriented template that captures the core algorithmic skeleton independent of specific papers.

# ADD — Adversarial Diffusion Distillation (on-policy)

#

# student: G_φ(x_s; s->0) (few-step sampler or 1-step)

# teacher: Φ_teacher(x_t; t->0) (full sampler)

# discriminator: D_ψ(·)

def train_add(student_G, teacher_sampler, discriminator_D, schedule, optimizer_G, optimizer_D,

lambda_adv=1.0, s_max_steps=K_student):

for it in range(num_train_steps):

# --------- sample real data ----------

x0_real = sample_data()

# --------- Step 1: forward diffuse to x_s ----------

s = sample_time_in_range(0, s_max_steps) # your "0 < s <= K"

eps = sample_gaussian_like(x0_real)

x_s = schedule.alpha(s) * x0_real + schedule.sigma(s) * eps

# --------- Step 2: student denoise (inference-like) ----------

x0_S = student_G(x_s, s, 0, schedule) # denotes G_φ(x_s; s->0)

# --------- Step 3: re-noise student output (on-policy) ----------

t = sample_time_in_range(0, T)

eps2 = sample_gaussian_like(x0_S)

x_t = schedule.alpha(t) * x0_S + schedule.sigma(t) * eps2

# --------- Step 4: teacher denoise from x_t ----------

with no_grad():

x0_T = teacher_sampler(x_t, t, 0, schedule) # Φ_teacher(x_t; t->0)

# --------- Step 5: distillation + adversarial ----------

# distillation/regression term (L2/L1/LPIPS etc.)

L_distill = distance(x0_S, stopgrad(x0_T))

# GAN losses (non-saturating)

# L_adv^G = -E log D(x0_S)

# L_D = -E log D(x0_real) - E log(1 - D(x0_S))

D_real = discriminator_D(x0_real)

D_fake = discriminator_D(x0_S.detach())

L_D = -(log(D_real) + log(1.0 - D_fake)).mean()

optimizer_D.zero_grad()

L_D.backward()

optimizer_D.step()

# generator adversarial

D_fake_for_G = discriminator_D(x0_S)

L_adv_G = -log(D_fake_for_G).mean()

L_G = L_distill + lambda_adv * L_adv_G

optimizer_G.zero_grad()

L_G.backward()

optimizer_G.step()

Inference (Fast Generation). ADD is specifically designed for single-step or ultra-low-step generation. At inference, the discriminator and the teacher model are completely discarded. You simply draw random Gaussian noise $x_T$ and pass it through the student model once. Thanks to the adversarial training, the student directly outputs a high-fidelity, perceptually sharp image $x_0$ without needing an iterative denoising loop or a complex scheduler. If trained for multi-step (e.g., 4 steps), you would run the student 4 times, but for the standard 1-step ADD, it is a direct mapping from noise to image.

9.2 Progressive Adversarial Diffusion Distillation (PADD)

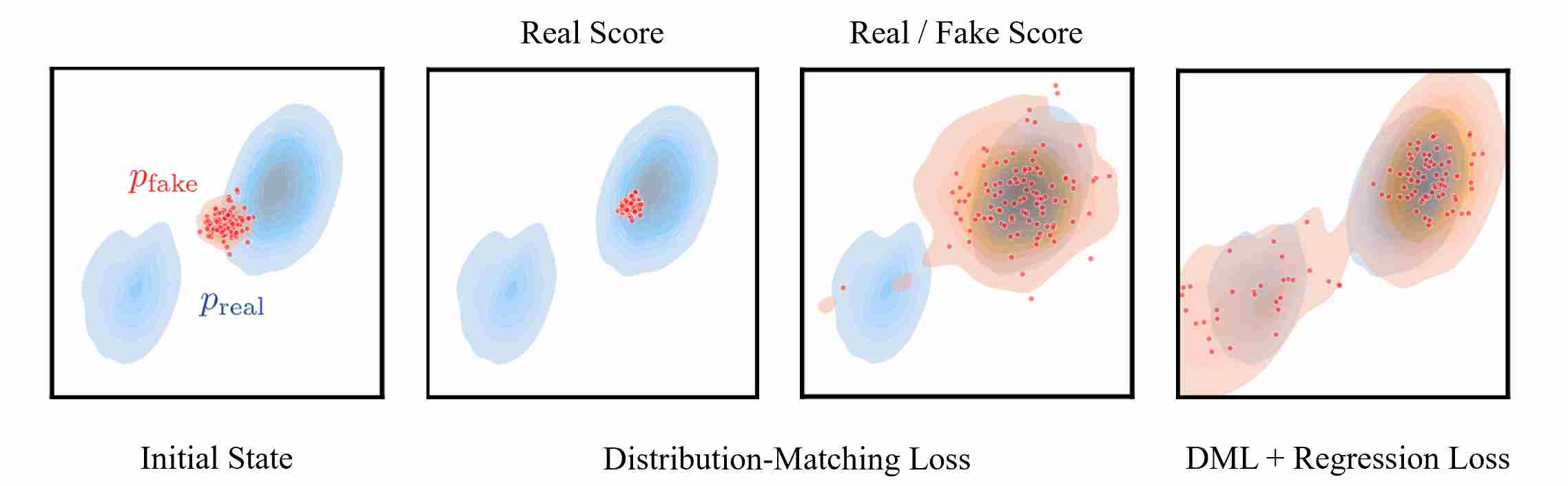

Progressive Adversarial Diffusion Distillation (PADD) 12 can be read as a targeted “fix” of ADD/SDXL-Turbo-style adversarial distillation: rather than training the student to jump to the ODE endpoint at every step (and then re-noising again for multi-step sampling), PADD trains a student that directly approximates the teacher’s probability flow transition between two timesteps, and uses an adversarial signal to recover sharpness.

Conceptually, PADD = (i) progressive solver distillation (stability) + (ii) flow-preserving adversarial constraint (compatibility) + (iii) mode-coverage relaxation (artifact mitigation).

9.2.1 Principle: distill a flow-preserving jump operator

Let a teacher diffusion sampler define a deterministic probability-flow (e.g., DDIM / PF-ODE) update operator. For training, we sample a clean latent/image pair \((x_0, c)\) and noise \(\epsilon\sim\mathcal N(0,I)\), then jump to an arbitrary timestep \(t\) to re-noise:

\[x_t = \mathrm{forward}(x_0,\epsilon,t).\]To distill a large step of size \(n_s\), the teacher rolls out \(n\) small solver steps (step size \(s\), so that \(n_s=n\cdot s\)) to obtain the target \(x_{t-n_s}\). In “direction-field” form (as in PF-ODE / DDIM-style updates), denote the teacher field by \(u_t = f_{\text{teacher}}(x_t,t,c)\), and a deterministic move operator \(\mathrm{move}(\cdot)\) that maps \((x_t,u_t)\) to a new time \(t'\):

\[\begin{aligned} u_t & = f_{\text{teacher}}(x_t,t,c),\\[10pt] \Longrightarrow\quad x_{t-s} & = \mathrm{move}(x_t,u_t,t,t-s), \\[10pt] \Longrightarrow\quad \quad \quad \ldots \\[10pt] \Longrightarrow\quad x_{t-n_s} & = \mathrm{move}(x_{t-(n-1)s},u_{t-(n-1)s},t-(n-1)s,t-n_s). \end{aligned}\]The student is trained to predict a single field \(\hat u_t=f_{\text{student}}(x_t,t,c)\) that directly “jumps” to \(t-n_s\):

\[\hat u_t = f_{\text{student}}(x_t,t,c),\qquad \hat x_{t-n_s}=\mathrm{move}(x_t,\hat u_t,t,t-n_s).\]A baseline progressive distillation would minimize the regression objective

\[\mathcal L_{\text{mse}} = \left\|\hat x_{t-n_s}-x_{t-n_s}\right\|_2^2.\]However, in the few-step regime, pure MSE tends to yield overly smoothed / blurry results. PADD replaces the regression supervision with an adversarial objective while keeping the distillation target tied to the teacher’s flow.

9.2.2 Flow-preserving conditional adversarial objective

PADD defines a discriminator that classifies whether \(x_{t-n_s}\) comes from the teacher transition or from the student transition, conditioned on the same starting state \(x_t\) (and prompt \(c\)):

\[D(x_t,x_{t-n_s},t,t-n_s,c)\in[0,1].\]With non-saturating GAN losses, the teacher sample is labeled “real” and the student sample is labeled “fake”. Define

\[p = D(x_t,x_{t-n_s},t,t-n_s,c),\qquad \hat p = D(x_t,\hat x_{t-n_s},t,t-n_s,c).\]Then the discriminator loss and generator (student) loss are:

\[\mathcal L_D = -\log(p) - \log(1-\hat p),\] \[\mathcal L_G = -\log(\hat p).\]Why conditioning on (x_t) matters. Under probability-flow sampling, the teacher’s mapping from \(x_t\) to \(x_{t-n_s}\) is (approximately) deterministic. If the discriminator sees both \(x_t\) and the candidate next state, it is forced to judge whether the pair \((x_t\!\to\!x_{t-n_s})\) follows the teacher’s same flow; consequently, the student must imitate the same flow to fool \(D\). This is the key ingredient for (a) multi-step sampling without re-noising hacks and (b) better compatibility with LoRA / ControlNet-like modules that were trained under the teacher’s dynamics.

9.2.3 Discriminator design in latent space

Instead of using an external image encoder (e.g., DINO) and discriminating only at \(t=0\), PADD reuses the teacher diffusion U-Net encoder as a discriminator backbone (latent-native, timestep-aware, prompt-aware). Concretely, let \(d(\cdot)\) be the copied encoder+midblock. PADD passes \(x_{t-n_s}\) and \(x_t\) through the shared backbone, concatenates their midblock features, and applies a small head:

\[D(x_t,x_{t-n_s},t,t-n_s,c) \equiv \sigma\Big(\mathrm{head}\big(d(x_{t-n_s},t-n_s,c),\; d(x_t,t,c)\big)\Big).\]This makes the discriminator scalable to high resolutions and valid across noise levels.

9.2.4 Relax mode coverage to remove “Janus” artifacts

A subtle but important phenomenon in adversarial distillation is the Janus artifact: the teacher can make sharp layout changes between nearby noise inputs, but a small-step student lacks the capacity to reproduce such “sudden turns”. Under a strictly flow-preserving adversarial objective, the student may sacrifice semantic correctness to preserve sharpness, producing conjoined / duplicated body parts.

PADD mitigates this by relaxing the flow-preservation constraint after the main adversarial training: it finetunes with a discriminator that no longer conditions on \(x_t\),

\[D'(x_{t-n_s},t-n_s,c)\equiv \sigma\Big(\mathrm{head}(d(x_{t-n_s},t-n_s,c))\Big),\]so the objective emphasizes per-sample realism more than strict transition consistency. In practice, at every progressive stage, PADD trains first with \(D\) (conditional, flow-preserving) and then finetunes with \(D'\) (unconditional, artifact removal).

9.2.5 A Canonical Training Algorithm (Template)

Below is a minimal, implementation-oriented template that captures the core algorithmic skeleton independent of specific papers.

Stage A: Warm-up (MSE distillation)

# PADD — Progressive Adversarial Diffusion Distillation

#

# Key objects:

# teacher f_T (frozen), student f_S (trainable)

# forward(x0, ε, t) -> x_t

# δ_T: teacher transition (rollout) x_t -> x_{t'}^T

# δ_S: student transition (one-jump) x_t -> x̂_{t'}

# D: conditional discriminator D(x_t, x_{t'}, t, t', c)

# D': unconditional discriminator D'(x_{t'}, t', c)

#

# Non-saturating GAN:

# L_D = -log D(real) - log(1 - D(fake))

# L_G = -log D(fake)

#

# Stage plan (paper recipe): 128→32 (MSE), then 32→8→4→2→1 (GAN), each stage: D then D'

def PADD_train_template():

# ------------------------------------------------------------

# Stage A: Warm-up (MSE distillation), e.g., 128 -> 32

# ------------------------------------------------------------

init student f_S ← teacher f_T

for it in range(N_mse):

sample (x0, c), sample ε, sample adjacent (t > t') from grid(32)

x_t = forward(x0, ε, t) # optional: if t==T use pure noise (schedule fix)

x_{t'}^T = δ_T(x_t, t→t', c) # teacher rollout target

x̂_{t'} = δ_S(x_t, t→t', c) # student one-jump

update f_S by minimizing ||x̂_{t'} - stopgrad(x_{t'}^T)||^2

Stage B: Conditional Progressive Adversarial Distillation

# Given: teacher (frozen), student (trainable), discriminator D (trainable)

# Hyper: n, s (so jump = n*s), timestep sampler, noise sampler

for each iteration:

x0, c = sample_data() # latent of image + text condition

t = sample_timestep_for_this_stage()

eps = torch.randn_like(x0)

# (optional) schedule fix: if t == T, use pure noise as input

xt = Forward(x0, eps, t) # Eq(24) hack for t=T

# teacher target: run n small steps (size s) to reach t-ns

x_teacher = teacher_multistep(teacher, xt, t, n, s, c) # gives x_{t-ns}

# student one jump

x_fake = student_jump(student, xt, t, n, s, c) # gives x̂_{t-ns}

# --- update discriminator (non-saturated GAN) ---

p_real = D(xt, x_teacher, t, t-n*s, c)

p_fake = D(xt, x_fake.detach(), t, t-n*s, c)

L_D = -log(p_real) - log(1 - p_fake)

step(D, L_D)

# --- update student (generator) ---

p_fake_for_G = D(xt, x_fake, t, t-n*s, c)

L_G = -log(p_fake_for_G)

step(student, L_G)

Stage B: UnConditional Progressive Adversarial Distillation

# After conditional stage converges

for each iteration:

x0, c = sample_data()

t = sample_timestep_for_this_stage()

eps = randn()

xt = Forward(x0, eps, t)

x_teacher = teacher_multistep(...)

x_fake = student_jump(...)

# discriminator without conditioning on xt

p_real = D_prime(x_teacher, t-n*s, c)